Reading the Scientific Study to Understand a Pancreatic Cancer Treatment

When you’re a patient or a loved one, trying to make sense of a scientific study is a lofty goal.

Not only do you have to understand basic medical terminology, you also have to be able to analyze data and understand why the authors arrive at their conclusions—and whether you agree with them.

“In today’s world, everyone wants a quick answer,” says Anna D. Barker, Ph.D., chair of the American Association for Cancer Research Scientist↔Survivor Program; co-director of Complex Adaptive Systems Initiative; director of the National Biomarker Development Alliance; director of Transformative Healthcare Knowledge Networks; and professor of Life Sciences at Arizona State University. The trouble is, news headlines only scratch the surface of study findings, and the conclusions they draw are often misleading. Think of all the foods that, according to headlines, cause cancer in one study and are healthy to eat in another. If you do try to access and read the full study, it can feel a bit like trying to read a story in a foreign language when the only words you know are “hello” and “thank you.”

Breaking Down the Scientific Study

Most people consult “Dr. Google” before they even see a study abstract or journal article. A better bet: Search PubMed, which provides access to Medline, the National Library of Medicine’s database. If you just want to get the gist of whether a study is meaningful, reviewing the abstract on Medline may be sufficient. But when you’re weighing treatment options based on study findings, it’s critical to review the entire study. Here’s how:

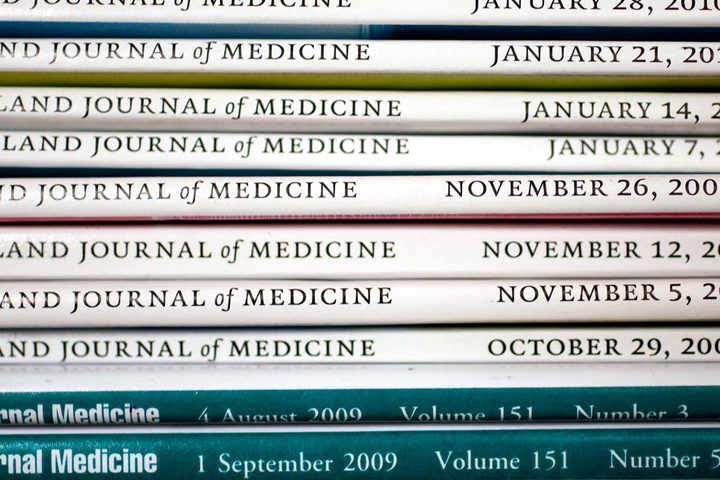

Look at the journal. If a study is published in a top-tier publication, such as the New England Journal of Medicine (NEJM), the Journal of the American Medical Association (JAMA), Cancer Research, or Journal of Clinical Oncology, you can be confident there’s a peer review process in place to ensure authors designed and conducted a solid study. Unfortunately, there are plenty of pseudo-journals that don’t require outside experts to review studies prior to publication.

Check the study design.

Some studies are designed to explore the relationship between two (or more) things. Others compare the effectiveness of different therapies. Still others look for patterns that relate to specific health outcomes. In every case, to analyze the quality of a study, ask yourself three key questions:

- Do they start with a good question? “For a study to be really good, you have to start with a relevant clinical question,” says Barker. “So, if the study answers a particular question, will the answer impact patients?” Take this NEJM study testing whether FOLFIRINOX (combination chemotherapy with fluorouracil, leucovorin, irinotecan, and oxaliplatin) leads to longer overall survival than gemcitabine. It’s a simple, straightforward question, and it has meaning in a clinical setting.

- Do the authors have an experimental design that makes sense given the question they chose to ask? In the NEJM study, the authors randomly assigned 493 patients who had their pancreatic cancer tumors surgically removed to receive either a modified FOLFIRINOX regimen or gemcitabine for 24 weeks. With pancreatic cancer, the focus should be on prospective trials (like this one), where researchers follow patients over a period of time. “Retrospective studies that look back at a group of patients and try to draw conclusions are subject to bias and confounding,” explains Andrew Ko, M.D., a professor of medicine at the Helen Diller Family Comprehensive Cancer Center at the University of California, San Francisco, and an American Society of Clinical Oncology (ASCO) expert.

- Are the methods clear? Are samples required by the study collected in an unbiased way? And can someone else repeat the research using the information in the methods section?

Watch the numbers.

- Phases: For clinical trials, the larger the phase number, the more rigorously tested and reliable the result. Phase I and phase II trials usually focus more on safety—whether there are too many bad side effects—than effectiveness of a particular therapy. Phase III trials compare new therapies to either an existing therapy or a placebo (a.k.a., dummy pill) and are conducted in larger groups of patients.

- Sample size: The bigger the number, the more likely the results are generalizable to a larger population.

- P value: The smaller the number, the more likely the results are “real” and not based on chance. The usual threshold for statistical significance in scientific research is a p value of less than 0.05, though more rigorous studies aim for a p value of less than 0.01. “Still, there’s a difference between what may be statistically significant and what is clinically meaningful,” says Ko.

- Effect size: The effect size tells you how large the measured difference actually is. A study might yield a statistically significant finding with an effect size so small that it offers little practical benefit to patients. So, for example, if a new drug reduces blood pressure by one point, that point difference may be meaningless in real life.
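
To see how sample size, p value, and effect size interact, here is a minimal Python sketch. It is an illustration only, not a method taken from any study mentioned here. It assumes two equal-sized groups, a one-point difference in average blood pressure, a shared standard deviation of 10 points, and a simple two-sided z-test; the function name `two_group_summary` and all of the numbers are hypothetical.

```python
import math


def two_group_summary(mean_diff, sd, n_per_group):
    """Return (effect_size, z, p_value) for two equal-sized groups.

    Assumes both groups share the same standard deviation `sd` and that
    a two-sided z-test is an acceptable approximation (hypothetical setup).
    """
    # Standardized effect size (Cohen's d): the difference measured in SD units.
    effect_size = mean_diff / sd
    # Standard error of the difference between two group means.
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    # Two-sided p value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return effect_size, z, p_value


# Same one-point difference, two very different (made-up) sample sizes.
small_trial = two_group_summary(mean_diff=1.0, sd=10.0, n_per_group=50)
large_trial = two_group_summary(mean_diff=1.0, sd=10.0, n_per_group=5000)

for label, (d, z, p) in [("50 per group", small_trial),
                         ("5,000 per group", large_trial)]:
    print(f"{label}: effect size = {d:.2f}, z = {z:.2f}, p = {p:.2g}")
```

Under these assumptions, the effect size is the same small value (0.1) in both cases, but only the larger trial crosses the usual 0.05 threshold. A tiny p value in a very large study can therefore sit alongside a difference that may be too small to matter to a patient.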

Look at the end point.

With pancreatic cancer studies, the typical end point is disease-free survival, as in the NEJM study, where researchers followed subjects for six months. Secondary end points often include overall survival and safety. The bottom line: Patients should be followed for a period of time to ensure the results stick.

- Check for biases. Who funded the study? Did the authors report any conflicts of interest? Does the author have a vested interest in the results? If one of the authors sits on the board of the pharmaceutical company that funded the study, it doesn’t necessarily mean the study is flawed, but it may warrant some skepticism.

- Beware of toxicity. “It’s always important to look at toxicity, but especially in pancreatic cancer trials since patients are already so vulnerable,” says Barker.

Going Beyond the Abstract

Focusing exclusively on the abstract is like reading the back cover of a book. It provides the big picture (i.e., what the book is about), but it often bypasses critical points of the story. Similarly, the abstract often fails to mention limitations of the study, potential factors that may confuse the results, and key analyses.

“No study is going to be perfect,” says Ko. “But the authors should state what the flaws are and explain why those flaws don’t negate the results.”

Want to review the full study without paying a hefty $30-$35 fee? Email the corresponding author, suggests Barker. Most researchers are happy to share the full text of studies they authored.